Let is the input space, and is the output space. Labeled example: . Interpretation:

- represents the description or measurements of an object;

- is the category to which that object belongs.

Loss

Assume there is a distribution over space of labeled examples .

- is unknown, but it represents the population we care about;

- Correct labeling function: and that ;

- We define the Loss of the classifier

True Risk

The true risk of a classifier is the probability that not predict correctly on

In other words, the true risk is the expected value of the loss

Bayes Classifier To find , we try to minimize the True Risk , such that As we already know, this is Bayes Classifier.

Bayes Risk

Donate by the Bayes classifier

- For the Bayes Risk it holds for any and , where refer to the set of all possible classifiers.

- You cannot improve over the Bayes Risk.

Batch Learning

We do not know and we need to estimate from the data

- Learner’s input: Training data

- Learner’s output: A prediction rule

The goal of learner is that

h shoud be correct on future examples.

IID condition

Key assumption: an i.i.d.(identically and independently distribution) sample from .

This assumption is the

This assumption is the connection between what we've seen in the past and what we expect to see in the future.

Empirical Risk

Calculate Empirical Risk by benchmarking the prediction against ground truth:

where is the indicator function means the Loss function here is 0-1 Loss.

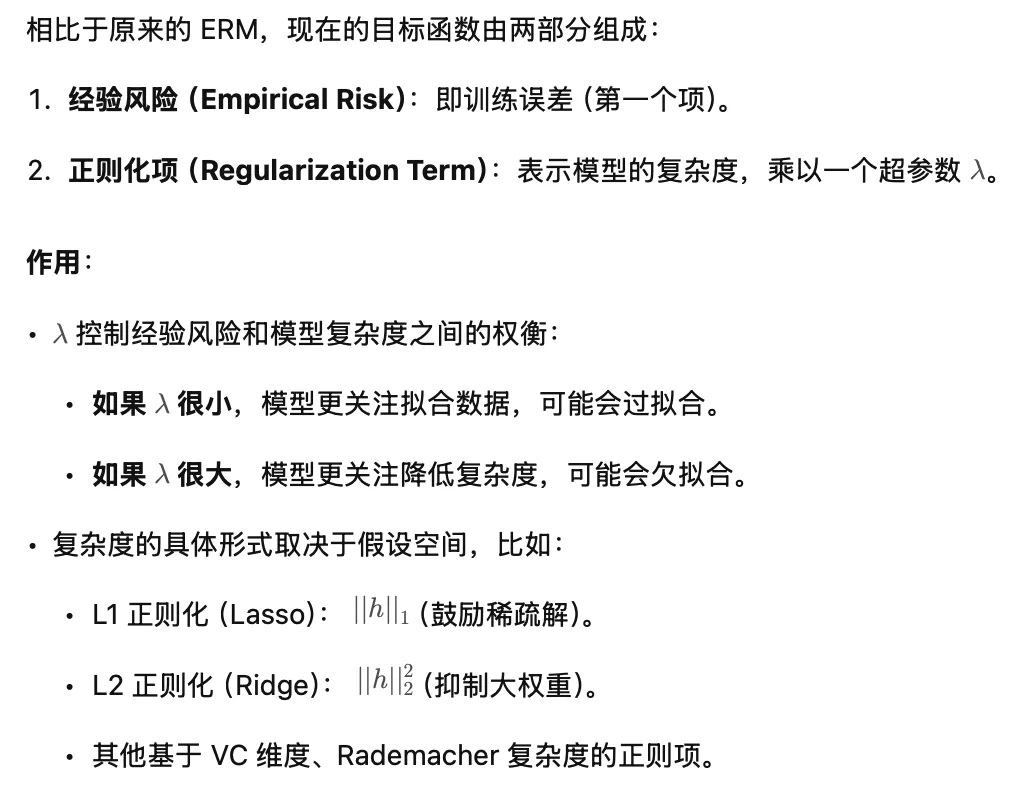

Probably Approximately Correct (PAC) Learning

Can Only Be Probably Correct

Claim: Even when , we can’t hope to find s.t. . Relaxation: , where is user-specified

Can Only Be Approximately Correct

Claim: No algorithm can guarantee for sure. Relaxation: Allow the alg. to fail with probability , where is user-specified.

- The learner doesn’t know and Bayes predictor .

- The learner specifies the accuracy parameter and confidence parameter .

- The number of examples can depend on the value of and , which we call it ); but not depend on distribution or Bayes function.

- Learner should output a hypothesis s.t. .

That is PAC Learning.

Realizability Assumption

Assume that, for a given class of functions , there exists such that , which implies that is the Bayes classifier.

is called the sample complexity of learning .

is called the sample complexity of learning .

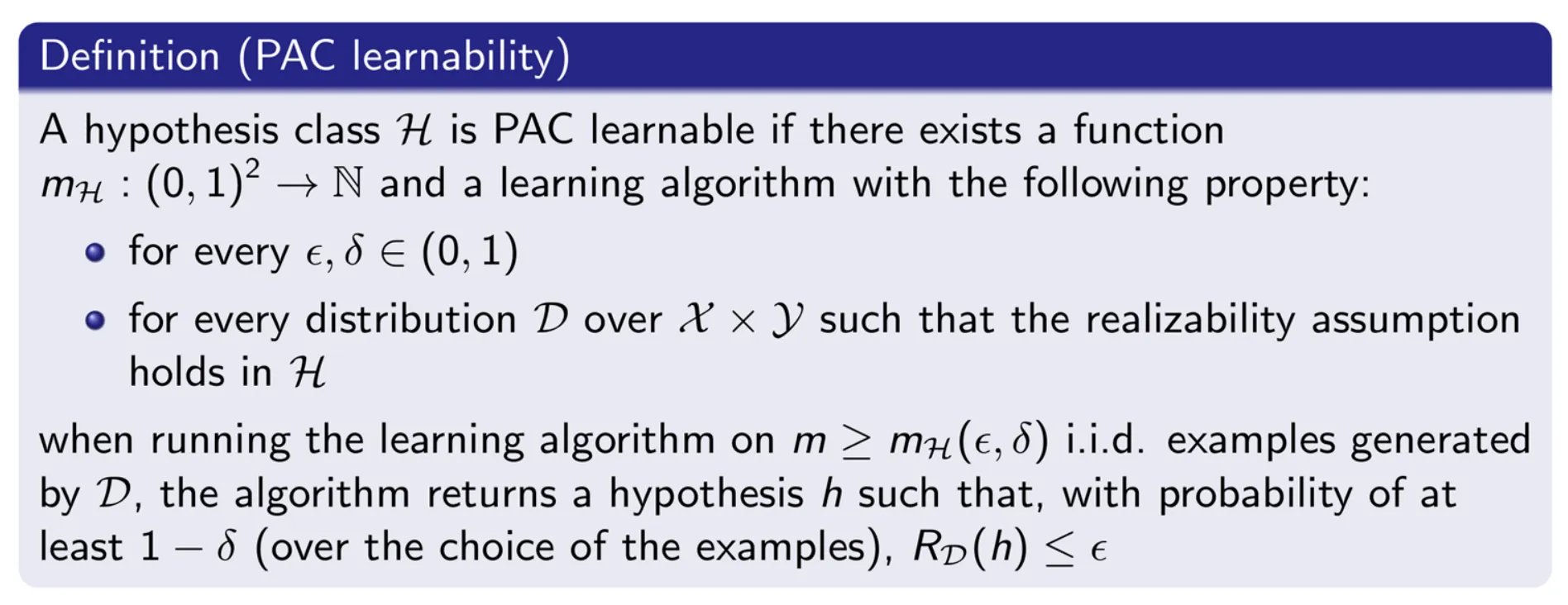

PAC Learnability 的核心思想是:如果一个假设类是 PAC Learnable,意味着我们可以通过有限数量的样本来学到一个接近最优的假设,并保证其泛化误差可控。

With more constraints,

With more constraints,

for example, the “0-1 Loss” is okay for that, but square Loss isn’t because it not bounded.

for example, the “0-1 Loss” is okay for that, but square Loss isn’t because it not bounded.

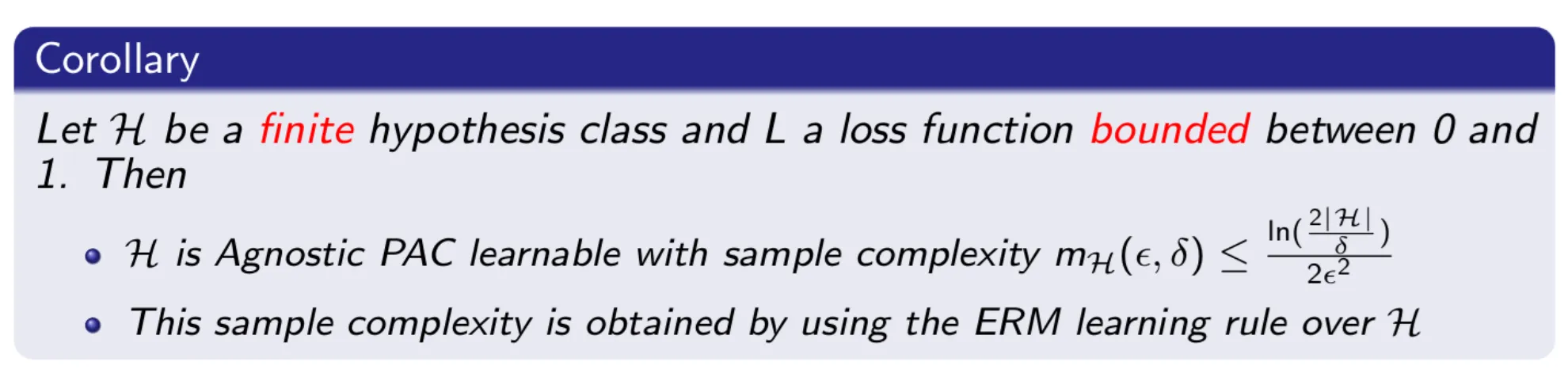

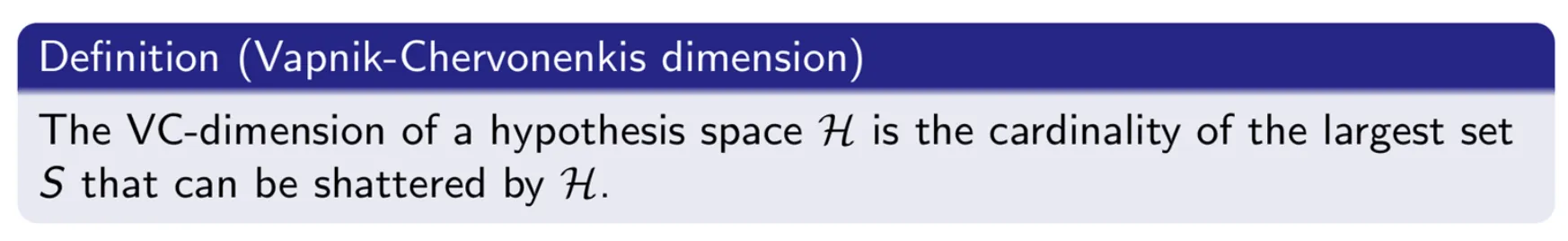

Shattering & VC-dimension

Which are the important concepts to measure complexity of hypothesis space.

对于一个假设集合(Hypothesis Class),如果它能够在一个大小为 的数据集上实现所有可能的二分类标记方式,那么我们说 shatter[v.粉碎] 了该数据集。i.e. 假设有一个二分类模型(例如直线分类器)和一个数据集,如果能找到某种参数设定,使得该模型能够对数据点的所有可能标签分配方式都正确分类,则称该模型 shatter 了这个数据集。

对于一个假设集合(Hypothesis Class),如果它能够在一个大小为 的数据集上实现所有可能的二分类标记方式,那么我们说 shatter[v.粉碎] 了该数据集。i.e. 假设有一个二分类模型(例如直线分类器)和一个数据集,如果能找到某种参数设定,使得该模型能够对数据点的所有可能标签分配方式都正确分类,则称该模型 shatter 了这个数据集。

假设空间 的 VC 维度( VC() )是 可以粉碎的最大数据点数。

假设空间 的 VC 维度( VC() )是 可以粉碎的最大数据点数。

In order to prove that is at least , we need to show only that there’s at least one set of size that can be shattered by .

Linear Classifier

Consider as the set of linear classifiers in dimensions, then .

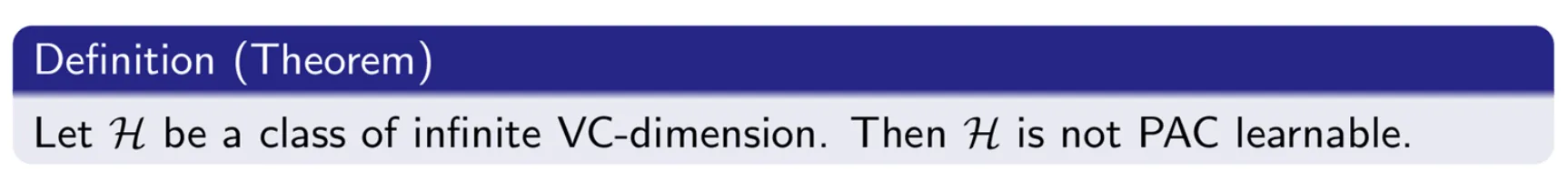

Not PAC Learnable

Infinite Hypothesis Classes

- 理论上,假设类可以是无限的,但计算机实现时会受限于离散化存储,任何模型参数都是以浮点数存储的,对于 的浮点数就只有 种可能的取值,因此假设类在计算上是有限的。

- 对于具有 个参数的模型,假设类的大小可以估计为 ,并且经验法则告诉我们,至少需要 个样本来有效训练模型。

Overfitting

The model has pretty excellent performance on the training set, but poor performance on the test data or the real world.

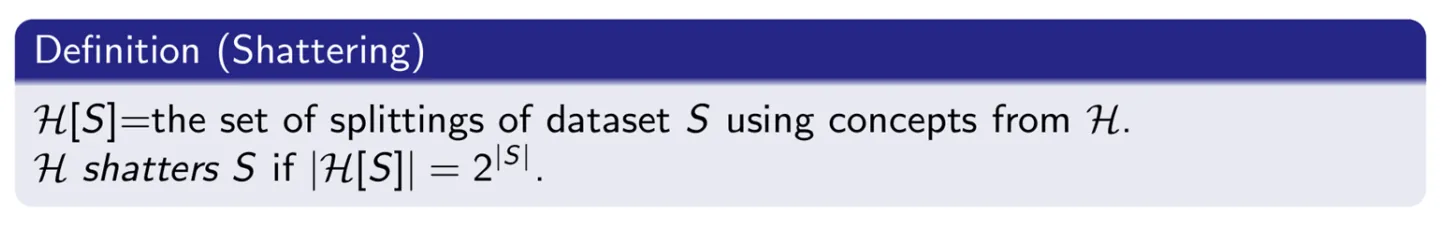

Regularization ERM (Empirical Risk Minimization)